TL;DR: A baseball game in Atlanta led to some ideas for visual scorecards, performance-above-replacement metrics, and effort-normalised evaluation that seem useful, or at least interesting.

I have been in Atlanta for the last few days teaching at SISMID with Nick Reich and Thomas Robacker (the course materials have some really good, and definitely not biased, new forecasting content plus other tweaks). It was a lot of fun, even if Atlanta is about 300% too hot for me.

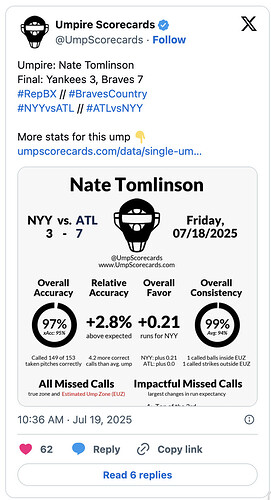

Nick was kind enough to organise a trip to the Braves vs Yankees baseball game, which was super fun. What really struck me was the (famously) large number of stats everywhere, especially on the big screens. Each new batter was shown with their summary stats, and the pitcher got a similar panel.

This got me thinking about how nice it would be to have something similar for infectious disease forecasting models. People could put it in their READMEs to summarise performance, and forecast hubs could use it to summarise a model visually.

We then had to escape the stadium, which naturally meant camping in a car park for a while until the traffic cleared. This gave me some time to think about how these scorecards might interact with the model cards that generative AI has started using. These are essentially blocks of YAML metadata at the top of a README that describe a model and its performance in a standardised format.

I then spent a lot of my free day in Atlanta thinking about this whilst zipping up and down the BeltLine. My conclusion is that there is a fairly natural way to express this as an extension of scoringutils that outputs both a model card (i.e. YAML) and a scorecard (an image). Claude and I have been iterating on a design document, and I am very keen for feedback. In particular, I would like to know whether there are examples of this kind of thing in the wild. It is a very general, non-domain-specific concept, so it feels like there very well might be.
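A minimal sketch of the model-card half of this idea follows. It is written in Python for illustration, even though scoringutils is an R package, and it assumes the summary metrics have already been computed. The field names (`model_name`, `n_forecasts`, `coverage_50`, and so on) are hypothetical and do not follow any existing model-card standard. The values are placeholders matching the prototype scorecard below. The YAML is written by hand to keep the example dependency-free; a real implementation would use a YAML library.

```python
import json


def to_yaml_lines(data, indent=0):
    """Render a nested dict of scalars as YAML lines.

    Scalars are written with json.dumps, which gives valid YAML for strings,
    numbers, booleans and null. Lists are not handled in this sketch.
    """
    lines = []
    pad = "  " * indent
    for key, value in data.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml_lines(value, indent + 1))
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return lines


def model_card_front_matter(summary):
    """Wrap the rendered summary in '---' delimiters for the top of a README."""
    return "\n".join(["---", *to_yaml_lines(summary), "---"])


# Hypothetical field names; placeholder values taken from the prototype scorecard.
summary = {
    "model_name": "MODEL NAME",
    "team": "Team/Organization",
    "n_forecasts": 127,
    "since": "2023-01",
    "metrics": {
        "coverage_50": 0.48,
        "coverage_90": 0.87,
        "relative_skill": {"natural": 0.95, "log": 0.87},
        "bias": -0.02,
    },
}

print(model_card_front_matter(summary))
```

The same summary object could then drive both outputs: this YAML block for the README and the plotted scorecard image.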

The scorecard prototype looks like this:

    ┌─────────────────────────────────────────────────────────────────┐
    │ [Logo] MODEL NAME                    PAR: +2.3%/+1.9% (overall) │  <- Header (Rows 1-3)
    │ Team/Organization                              Nat: ▁▃█▂ (1-4w) │
    │                                                Log: ▂▄█▃ (1-4w) │
    ├─────────────────────────────────────────────────────────────────┤
    │ ┌───────────┬──────────┬───────────┬────────┬─────────────────┐ │  <- Metrics (Rows 4-8)
    │ │ Coverage  │ WIS      │ Rel Skill │ Bias   │ Ensemble Contrib│ │
    │ ├───────────┼──────────┼───────────┼────────┼─────────────────┤ │
    │ │ 50%: 48%↓ │ Nat: 42↑ │ Nat: 0.95↑│ -0.02 ↓│ Nat: +3.2%↑     │ │
    │ │ 90%: 87%↓ │ Log: 0.38│ Log: 0.87↑│        │ Log: +2.8%↑     │ │
    │ └───────────┴──────────┴───────────┴────────┴─────────────────┘ │
    ├─────────────────────────────────────────────────────────────────┤
    │ [Performance Timeline Graph - Model vs Others]                  │  <- Timeline (Rows 9-11)
    ├─────────────────────────────────────────────────────────────────┤
    │ Forecasts: 127 | Since: 2023-01 | Target Coverage: 95%          │  <- Footer (Row 12)
    │ Best: 2-week ahead | Most consistent: Q3 2024                   │
    └─────────────────────────────────────────────────────────────────┘
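The header sparklines (one character per forecast horizon, 1 to 4 weeks) could be produced with something like the minimal sketch below. It min-max scales a hypothetical list of per-horizon values onto the eight Unicode block characters. The scaling choice is an assumption. A shared scale across models might be fairer than per-model scaling.

```python
# Eight block characters from lowest to highest.
BARS = "▁▂▃▄▅▆▇█"


def sparkline(values):
    """Min-max scale values onto BARS; a flat series renders as the lowest bar."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return BARS[0] * len(values)
    scale = (len(BARS) - 1) / (hi - lo)
    return "".join(BARS[round((v - lo) * scale)] for v in values)
```

For example, the hypothetical per-horizon values `[0.5, 1.2, 3.1, 0.9]` give `▁▃█▂`.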

One concern is that this is meant to be fun, and as I have iterated it has become progressively less fun and more information-dense. That is definitely something to watch. Adding the plots and logos may nudge it back in the fun direction.

We also had a good chat about the types of stats that are widely used in baseball. The one I found most interesting was performance above replacement (baseball's version is wins above replacement, or WAR). As I understand it, this adds up the value of everything a player does and compares it with what a readily available, replacement-level player would have produced. I would like a version of this that is weighted by player cost; more on this in a second. We already do things like this (e.g. value to an ensemble, where you compare ensemble performance with and without your model), but I think nothing quite like it.

I think we could get closer by looking at permutations of models in ensembles, as @sbfnk and co, and Spencer Fox and co, have both been doing recently. This would mean taking ensemble performance with our model and dividing it by the mean performance of all possible ensembles created by removing our model and instead duplicating another component model. A caveat is that I haven’t really thought through how this relates to measures we already have (e.g. the ensemble with and without the model, or simply relative skill). A further nuance is that you might want to compute replacement within sub-strata or categories (e.g. replacing only with other renewal models). Except in very large forecasting problems with many contributing models, though, this is likely to run into issues, because each category will contain only a handful of models.
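A minimal sketch of the replacement calculation described above follows. It makes several assumptions. Forecasts are quantiles, the ensemble is an equally weighted quantile-wise mean, and the score is WIS (lower is better). WIS is computed here as twice the mean pinball loss over a symmetric set of quantile levels that includes the median. All function names are hypothetical and are not part of scoringutils. The sign convention is also an assumption. The result is reported as 1 minus the ratio, so positive values mean the ensemble does better with the model than with a replacement.

```python
from statistics import mean

# Hypothetical quantile levels: symmetric and including the median.
QUANTILE_LEVELS = [0.05, 0.25, 0.5, 0.75, 0.95]


def pinball_loss(level, q, y):
    """Quantile (pinball) loss of predicted quantile q at the given level."""
    return (float(y < q) - level) * (q - y)


def wis(quantiles, y, levels=QUANTILE_LEVELS):
    """WIS as twice the mean pinball loss over a symmetric quantile set."""
    return 2 * mean(pinball_loss(t, q, y) for t, q in zip(levels, quantiles))


def ensemble_wis(forecasts, observations, members):
    """Mean WIS over targets of an equally weighted quantile-mean ensemble.

    forecasts: dict model -> list (one entry per target) of quantile lists.
    members: model names; repeating a name duplicates that model.
    """
    scores = []
    for i, y in enumerate(observations):
        ensemble = [mean(qs) for qs in zip(*(forecasts[m][i] for m in members))]
        scores.append(wis(ensemble, y))
    return mean(scores)


def performance_above_replacement(forecasts, observations, model):
    """1 - WIS(full ensemble) / mean WIS(ensembles with `model` replaced).

    Each replacement ensemble drops `model` and duplicates one other component.
    """
    others = [m for m in forecasts if m != model]
    with_model = ensemble_wis(forecasts, observations, list(forecasts))
    replaced = [ensemble_wis(forecasts, observations, others + [dup]) for dup in others]
    return 1 - with_model / mean(replaced)
```

Weighting the replacement ensembles, or restricting replacements to a sub-stratum, would only change how `others` is built.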

If it does shake out as being useful, then, as with baseball, I like the idea of trying to normalise against effort. It has bugged me for a while that we confound model performance with modelling effort. All the forecasting I have done points to a relationship between how much time you have for reviewing, iterating, and just looking at the forecasts and how well you do in evaluations. If so, well-resourced teams that focus heavily on forecasting should do very well, whilst less well-resourced teams do less well. There is also an interaction with model complexity. A more complex model takes more time, leaving less effort for the other forecasting tasks that might be what actually drives performance. This would bias evaluations towards simpler models (it is rather convenient that I think this, isn’t it?). Another way to think about this is as a “house effect”, where all models from a team share some common performance characteristics and differ in others.
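One simple way to make the “house effect” idea concrete is sketched below. It assumes each model has a ratio-scale relative skill (lower is better) and a known team. Each model's log relative skill is split into a team mean (the house effect) and a model-specific residual. Both are mapped back to the ratio scale, so the house effect is the geometric mean of the team's relative skills. This is a descriptive decomposition with hypothetical names, not a fitted model, and it ignores uncertainty and unequal numbers of models per team.

```python
from collections import defaultdict
from math import exp, log
from statistics import mean


def house_effects(rel_skill, team_of):
    """Split relative skill into a team ('house') effect and a model residual.

    rel_skill: dict model -> ratio-scale relative skill (lower is better).
    team_of: dict model -> team name.
    Returns (team effects, model residuals), both on the ratio scale.
    """
    by_team = defaultdict(list)
    for model, skill in rel_skill.items():
        by_team[team_of[model]].append(log(skill))
    # Team effect: mean log skill across the team's models (geometric mean).
    team_effect = {team: mean(logs) for team, logs in by_team.items()}
    # Residual: how far each model sits from its team's typical skill.
    residual = {m: log(s) - team_effect[team_of[m]] for m, s in rel_skill.items()}
    return (
        {t: exp(v) for t, v in team_effect.items()},
        {m: exp(v) for m, v in residual.items()},
    )
```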

Something to note is that we can also get at this with model-based evaluation (e.g. adjusting for team size), but that requires some fairly complex additional machinery, so having a good summary measure might still be useful.

Summary

For me, the baseball statistics displayed at the game highlighted three gaps in how we evaluate forecasting models:

- Visual scorecards could make model performance more accessible than current approaches.

- Performance-above-replacement metrics might better capture model value within ensembles than existing measures.

- Effort-normalised evaluation could address the confounding between model performance and development resources.